This Is Why Deepfake Videos Are Women’s Worst Nightmare

If you’re anything like me, when you’ve seen “AI” casually mentioned on social media, you might have assumed people were talking about some guy named “Al” or a new kind of computer system. You probably didn’t pay it any mind, because the internet bombards us with so much information that it’s easy to tune things out.

It wasn’t until I dug deeper that I learned not only that “AI” stands for Artificial Intelligence but also that people are using it in destructive, exploitative and manipulative ways. Sure, Artificial Intelligence has been around since before many of us were born, but this latest development in AI is dangerous, especially for women. This application of Artificial Intelligence is known as the deepfake video.

Deepfake videos are manufactured to show real people saying or doing things they never actually said or did. According to CBS, deepfake videos “are made by feeding a computer an algorithm, or set of instructions, lots of images and audio of a certain person. The computer program learns how to mimic the person’s facial expressions, mannerisms, voice and inflections. If you have enough video and audio of someone, you can combine a fake video of the person with fake audio and get them to say anything you want.”

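The name “deepfake” reflects this process: it combines “deep learning,” the type of machine learning behind these programs, with “fake.” You don’t need to be a tech expert to make one, either. Software such as FakeApp can be used to create these videos.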

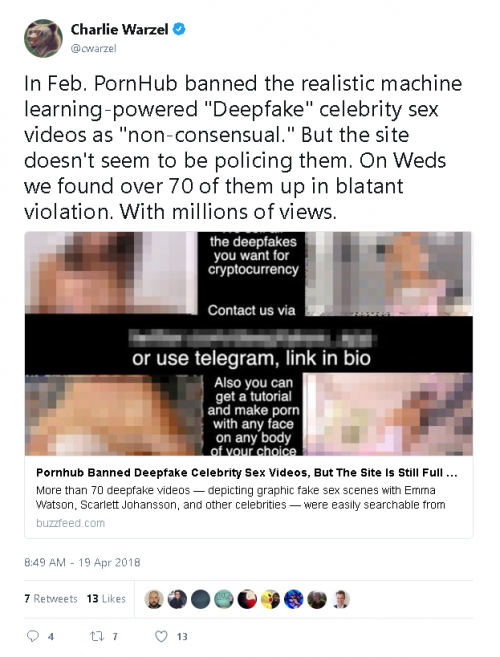

Deepfake videos are causing a lot of concern and mayhem because people have been using them to show politicians pushing specific agendas and celebrities appearing in porn. According to the New York Times, deepfake videos spread so rapidly that Pornhub, Twitter and other sites quickly banned them, and Reddit closed a handful of deepfake groups, including one with nearly 100,000 members.

The most significant concern, however, is how abusers could use deepfake videos to manipulate their victims into staying with them. Just as people have created deepfake videos of celebrities in porn, abusers now have the ability to create deepfake porn videos of their girlfriends or ex-girlfriends and use them as non-consensual porn or revenge porn.

According to Mashable, there hasn’t yet been a documented case of an abuser creating non-consensual deepfake porn of a victim. However, advocates such as Adam Dodge, legal director of the domestic violence agency Laura’s House, and Erica Johnstone, a partner at a law firm and co-founder of the nonprofit organization Without My Consent, believe that scenario is coming.

These advocates are raising this potential issue now because they watched the law and legal aid providers struggle to respond to the rise of non-consensual porn. They want to keep the same thing from happening with pornographic deepfake videos.

Unfortunately, some states have yet to take proactive steps on this issue. That makes many women cringe, because protecting yourself is no longer just about being vigilant and not sending nudes to partners. It goes deeper than that. Thankfully, though, all is not lost.

According to Johnstone and Dodge, if you think you’re a victim of a deepfake video (or revenge porn), you can make your social media accounts private, use Google search to identify public photos and videos for removal and ask family and friends to remove or limit access to photos that include you.

Even if you don’t think your partner would use this technology or engage in these activities, there are some warning signs to keep in mind: a partner who consistently asks for access to your phone or social media accounts, downloads a cache of your personal photos or frequently asks you to pose for images or videos. Johnstone also recommends setting “house rules,” on a case-by-case basis, about when and under what circumstances photos can be taken.

There are no federal laws that protect victims of non-consensual porn, nor are there state laws that include commercial pornography in their policies against revenge porn. However, Carrie Goldberg, a prominent New York lawyer who has taken on non-consensual porn cases, recommends that civil lawyers use “creative tools” like copyright infringement and defamation suits to seek justice for their clients, according to Mashable.

If you or someone you know believes they have been the victim of a pornographic deepfake video, non-consensual porn or revenge porn, please seek legal counsel to understand your options, review this checklist to support your legal efforts or contact your local authorities. You can also reach out to the National Domestic Violence Hotline if you don’t feel safe in your current relationship.